The Era of Exascale Computing Has Arrived. What Does That Even Mean?

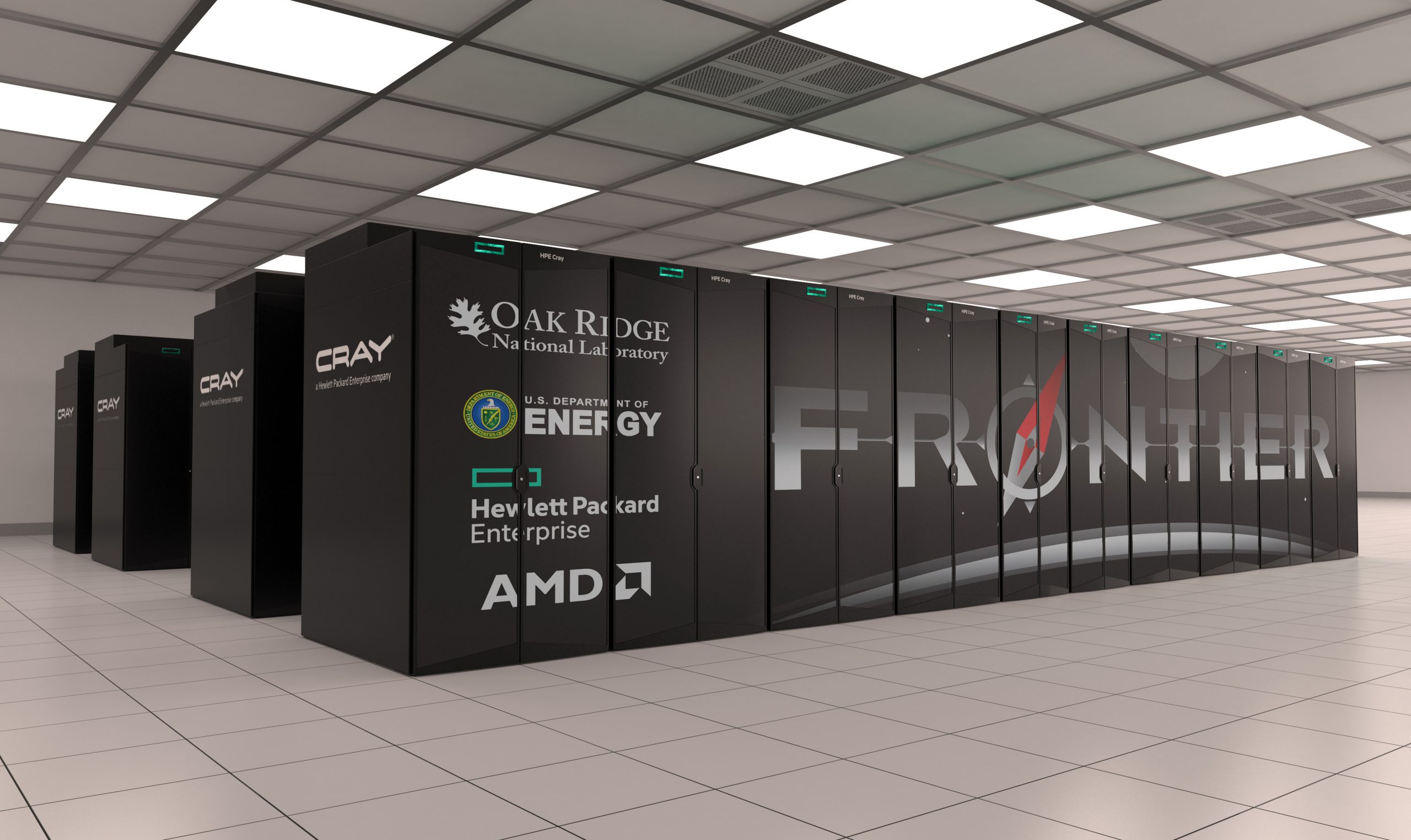

On May 30, Hewlett Packard Enterprise (HPE) officially announced Frontier:

Frontier, a new supercomputer that HPE built for the U.S. Department of Energy’s Oak Ridge National Laboratory (ORNL), has reached 1.1 exaflops, making it the world’s first supercomputer to break the exascale speed barrier, and the world’s fastest supercomputer, according to the Top500 list of world’s most powerful supercomputers.

Frontier also ranked number one in a category, called mixed-precision computing, that rates performance in formats commonly used for artificial intelligence, with a performance of 6.88 exaflops. Additionally, the new supercomputer claimed the number one spot on the Green500 list as the world’s most energy efficient supercomputer with 52.23 gigaflops performance per watt, making it 32% more energy efficient compared to the previous number one system.

FLOPS, or floating-point operations per second, is a measure of computing performance that is particularly important in scientific computing, where floating-point arithmetic is a requirement. One exaflop is 10^18 FLOPS, so 1.1 exaflops equates to 1,100,000,000,000,000,000 FLOPS. That is a lot of operations per second!
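To make the unit conversion concrete, here is a minimal Python sketch of that arithmetic. The helper name `exaflops_to_flops` is my own, purely for illustration; the only inputs are the 1.1 and 6.88 exaflops figures quoted above.

```python
from decimal import Decimal

# SI prefix "exa" means 10^18.
EXA = Decimal(10) ** 18


def exaflops_to_flops(exaflops: str) -> int:
    """Convert a figure in exaflops (given as a string) to plain FLOPS.

    Decimal is used instead of float so the result is an exact integer.
    """
    return int(Decimal(exaflops) * EXA)


# Figures quoted in the announcement above.
frontier_flops = exaflops_to_flops("1.1")    # Top500 result
mixed_flops = exaflops_to_flops("6.88")      # mixed-precision result

print(f"{frontier_flops:,} FLOPS")
print(f"{mixed_flops:,} FLOPS (mixed precision)")
```

Passing the figures as strings keeps the conversion exact; a binary float such as `1.1` cannot be represented precisely, which would obscure the point of writing out all those zeros.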

There are many moving pieces to Frontier, and I am proud to say that I am part of a small team that works on the Lustre file system, which runs on the Cray ClusterStor E1000 storage system on Frontier's backend. So, what does this mean?

Frontier ushers in a new era of scientific discovery and engineering breakthroughs at exascale speed.

In the end, it is more than a speed milestone or a system size. To steal an excerpt from the HPE website:

First, uncontrolled data growth is driving organizations of all sizes to data-intensive computing. Second, digital transformation has become a business imperative. And third, the number of HPC and AI workloads is exploding.

In response to these trends, AI, analytics, IoT, simulations, and modeling workloads are all converging into one business-critical workflow—a workflow that must operate at extreme scale and in real time.

These trends and realities are forcing a complete rethinking of compute, networking, software, and storage architectures. The resulting capabilities will take research and enterprise computing beyond super and into the exascale era.

[ … ]

This kind of supercomputing helps us simulate and analyze the world around us at an unprecedented level. Exascale systems will help solve the world’s most important and complex challenges.

Anyway, I am very excited about this milestone and what the future holds for exascale technology.
